Soldiers operating in urban environments have to make quick decisions when assessing whether someone means to do them harm or is simply an innocent civilian hanging around waiting for the bus.


In March 2004, US soldiers positioned in downtown Fallujah, Iraq, were responding to an Improvised Explosive Device (IED) found on the side of the road.

As the soldiers were investigating the IED, a man “approached very quickly on a bicycle,” and he “would not slow down” when warned.

Seeing something in the basket of the bike, the soldiers opened fire and killed him, but “no explosives were found in the bag/package that was in the basket of the bike.”

This is just one of many examples in the WikiLeaks War Logs of a civilian being killed in an urban environment because foreign soldiers perceived them as a threat.

While many of these disturbing incidents can be attributed to someone being in the wrong place at the wrong time, the Pentagon is now looking to outfit autonomous vehicles with surveillance technology such as AI and computer vision to automatically detect and assess whether someone is a threat, without relying entirely on soldiers to make that determination.

According to the Defense Advanced Research Projects Agency (DARPA), “human-based inference – replete with cognitive bias and imperfect memory – remains our most reliable method for determining who may be a threat and who is merely going about their day.”

With this recognition, DARPA is putting together a research opportunity and incubator to equip autonomous vehicles with the most sophisticated surveillance capabilities for use in urban settings.


DARPA’s Non-Escalatory Engagement to reduce Dimensionality (NEED) incubator describes the desire to develop “tools to accurately distinguish among threats and non-threats based solely on passively observed behaviors.”

In other words, the Pentagon is looking to fund research into technological capabilities that can tell the difference between someone who is just waiting around for the bus and someone who poses a threat — like someone scoping out a target.

And they want to enlist this technology for autonomous vehicles.

“My intent for this incubator is to find new approaches for dealing with the complexity and ambiguity of human behavior in urban spaces so we can keep US armed forces and local civilians safe while supporting stability operations,” said DARPA Program Manager Dr. Bartlett Russell, who will be holding an AMA on the NEED Incubator via the Polyplexus digital portal on Thursday.

“My work focuses on the intersection of human performance and autonomous or AI-enabled systems. I am generally interested in better ways of enabling human capability with these tools, sometimes by combining human and machine capabilities in non-traditional ways,” she added.

The purpose of NEED is to develop a library of Engagements that an autonomous vehicle can carry out and that:

- Are non-escalatory or de-escalatory when deployed

- Help reduce the dimensionality of the inference challenge of determining who may pose a threat to US and allied forces

Researchers will be asked to develop a set of Engagements that aerial and/or ground vehicles will carry out to test a specific question (akin to a hypothesis) about whether an individual or group poses a threat in an urban setting.

For example, a human soldier may walk up and talk to a person of interest on the street to see what they’re up to.

But an autonomous vehicle outfitted with high-tech surveillance equipment may approach the person in an attempt to block their view, while calculating whether that person is waiting for a bus or collecting information about a US installation, and evaluating every move they make to see if they are a threat.


“DARPA seeks non-escalatory and de-escalatory engagement strategies that autonomous vehicles can carry out to simultaneously reduce the complexity and ambiguity a Blue Force Commander faces when detecting and tracking threats from individuals and/or teams among non-threatening civilians in urban areas” — DARPA ‘NEED’ Incubator

Another disturbing revelation from the WikiLeaks War Logs (and something that DARPA appears to address) is how often civilians were gunned down at checkpoints for not slowing down, and sometimes even for accelerating after hearing warning shots.

One such incident occurred in June 2005 at Hurricane Point in Iraq.

A vehicle carrying 11 civilians, including family members, approached the checkpoint, “disregarded all hand and arm signals, and continued at a high rate of speed.”

Soldiers then fired warning shots, which were ignored, and the vehicle continued to accelerate, so the guards tried to disable the vehicle by shooting at the grill.

But when that didn’t work, they shot at the driver directly. The vehicle stopped, and the soldiers discovered that they had killed seven civilians, including two children, and injured two others.

According to the report, “The large number of civilian KIA [killed in action] resulted from the family having placed their children on the floor boards of the vehicle. The disabling shots aimed at the grill are believed to have traveled through the vehicle low to the floor boards causing the large number of KIA.”

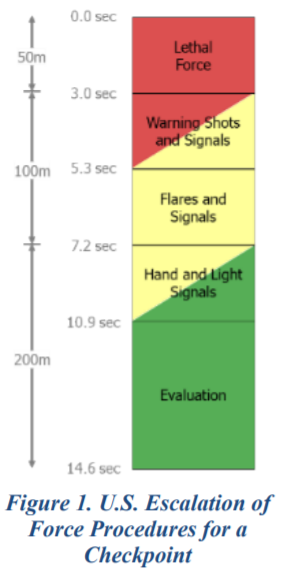

The military’s current “Escalation of Force Procedures for a Checkpoint” give a window of less than 15 seconds for guards to assess whether or not to use deadly force (see Figure 1).

The deadly incident at Hurricane Point seems to have been carried out by the book, yet civilian casualties were high.

According to DARPA, “the protocol that guards follow to determine the potential threat of an oncoming car at a checkpoint […] assumes an escalatory framework in which each act of noncompliance (not slowing down, not moving in the right direction) results in a more aggressive response (escalating from hand gestures to warning shots).”

The Pentagon is looking to avoid deadly situations like the one above through DARPA’s NEED opportunity, which also aims to avoid stirring up the locals.

When people hear gun shots or foreign soldiers shouting in a language they don’t understand, that can cause a lot of anxiety, and people react differently to stress.

Fight or flight — some may freeze, some may run, and some may become confrontational.

The Pentagon is looking to avoid escalating situations that irritate locals and put them on edge when all hell breaks loose.

According to DARPA, “In an open urban environment such interactions can interfere with daily activities and irritate locals. Even minor interactions such as an audio hail are escalatory; they exacerbate the inference challenge by negatively changing the locals’ behavior and increasing the chance of false positives.

“Moreover, in stability type operations, escalatory and disruptive interactions work against the overall mission by alienating the local population from stabilizing forces.”

With NEED, DARPA wants autonomous vehicles to be capable of telling friend from foe while staying on the good side of civilians in urban settings, either by de-escalating negative confrontations or by taking non-escalatory actions.

Military technology is often considered years ahead of commercial technology, and DARPA has funded research into common tech that people use every day.

The internet, GPS, and even the voice assistant tech that powers Alexa, Siri, and Cortana all trace at least part of their origins to DARPA-funded research.

If the surveillance capabilities of NEED ever become available to US law enforcement or private security firms, would you feel safer, or would you worry about the country becoming a police state?

If you saw fleets of autonomous surveillance vehicles watching you on the streets or following you from above, how would you react?
